Cybersecurity of 5G/6G Networks

Security research for 5G and beyond aims to protect these networks against cyber-attacks. This matters because 5G networks will carry critical applications in areas such as healthcare, transportation, and energy, and both operators and users need to be able to trust the networks these applications run on.

So far, we have developed security methods for AI-based

- Beamforming

- MIMO systems

- Spectrum sensing

- Channel estimation

- Intelligent-reflective surfaces,

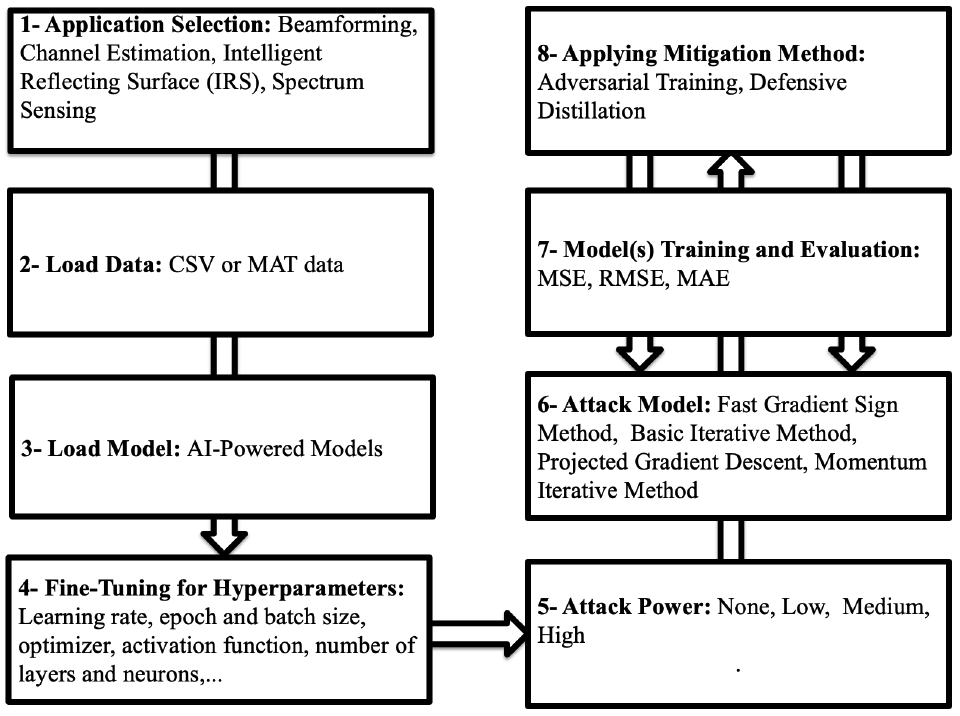

and more which will be extremely useful for the future 5G and 6G networks. We also proposed the first comprehensive security framework for 5G and beyond, which will be very helpful for mobile network operators and other stakeholders to deploy secure 5G networks. My future work includes developing security methods for new 5G and beyond technologies, such as mmWave, massive MIMO, and full-duplex. We also plan to continue our work on the comprehensive security framework to make it even more comprehensive and user-friendly.

Collaborations on 5G and Beyond Security

My research outputs and implementations can be found on the publications page. If you are interested in collaborating on 5G and beyond security research, please do not hesitate to contact me.

Ferhat Ozgur Catak, University of Stavanger, Norway, f.ozgur.catak@uis.no

Research Outputs

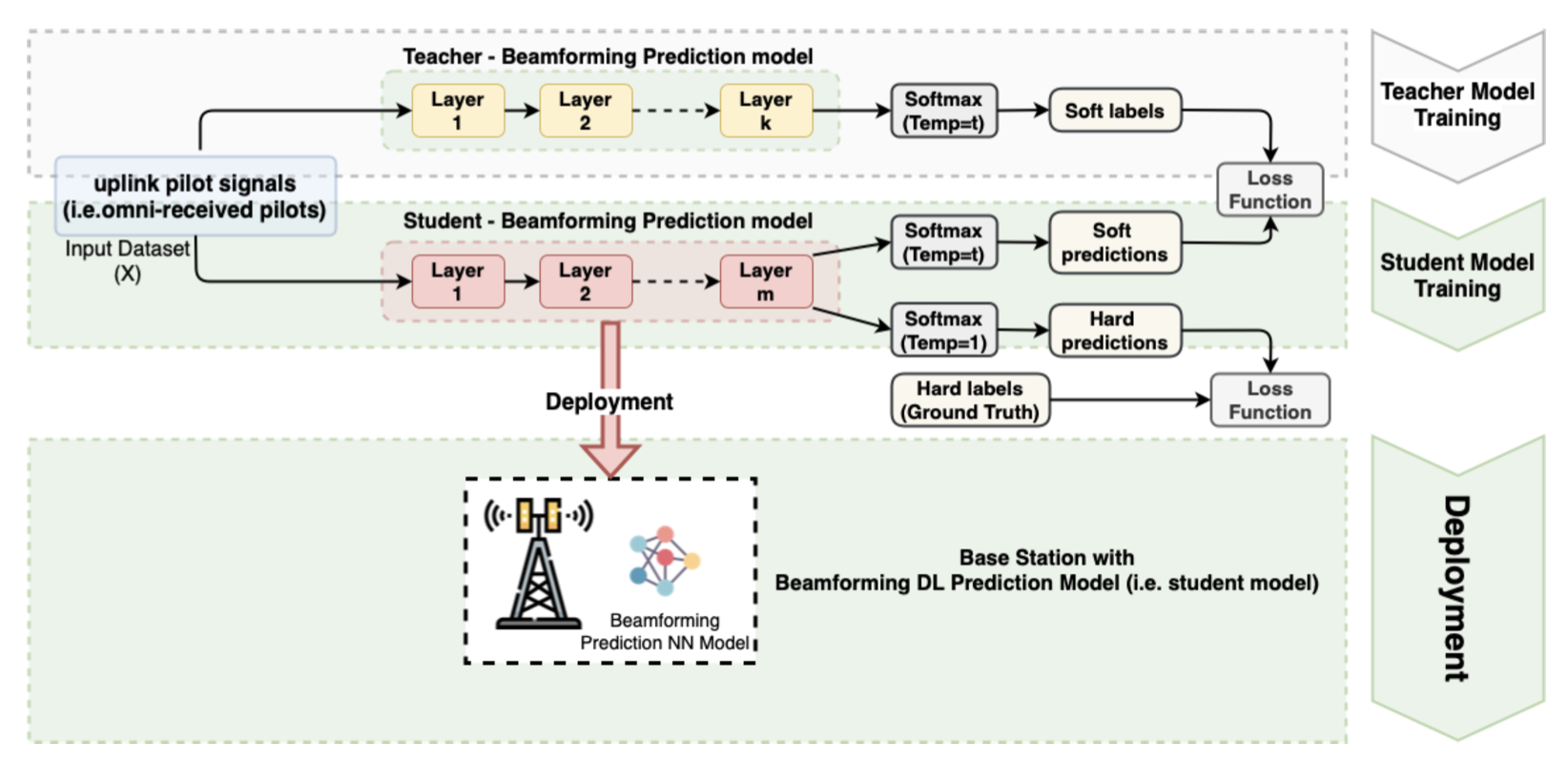

1. Adversarial security mitigations of mmWave beamforming prediction models using defensive distillation and adversarial retraining (International Journal of Information Security 2022)

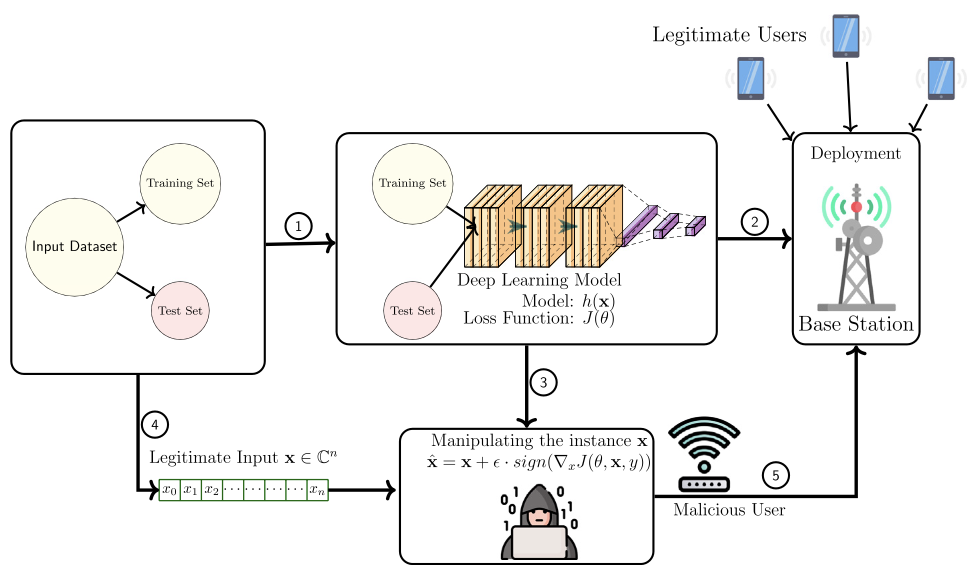

This research addresses the security of AI models in 5G, 6G, and future wireless networks. We examined deep learning models for mmWave beamforming prediction and asked whether they are vulnerable to adversarial attacks. Our tests measured how easily the models can be attacked and exposed several weaknesses. We then evaluated two mitigation methods, defensive distillation and adversarial retraining. The results show that these methods can protect the models against the attacks.

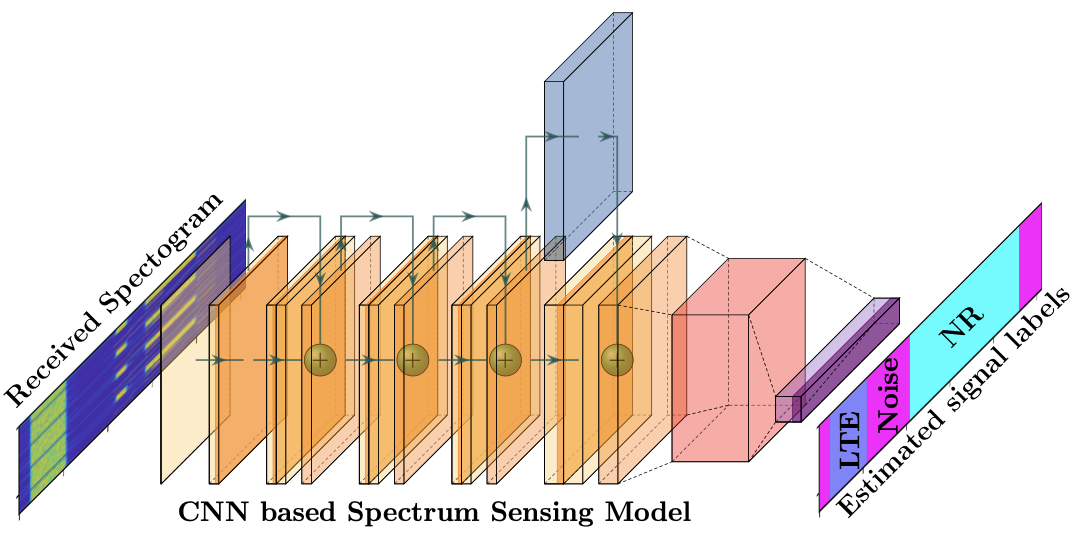

2. Mitigating Attacks on Artificial Intelligence-based Spectrum Sensing for Cellular Network Signals (IEEE GLOBECOM 2022)

This paper discusses the use of AI for spectrum management in cellular networks. It provides a vulnerability analysis of AI-based spectrum sensing, in which semantic segmentation models identify cellular network signals, under adversarial attacks with and without defensive distillation. The results show that mitigation methods can significantly reduce the vulnerability of AI-based spectrum sensing models to adversarial attacks.

3. A Streamlit-based Artificial Intelligence Trust Platform for Next-Generation Wireless Networks (IEEE Future Networks World Forum, 2022)

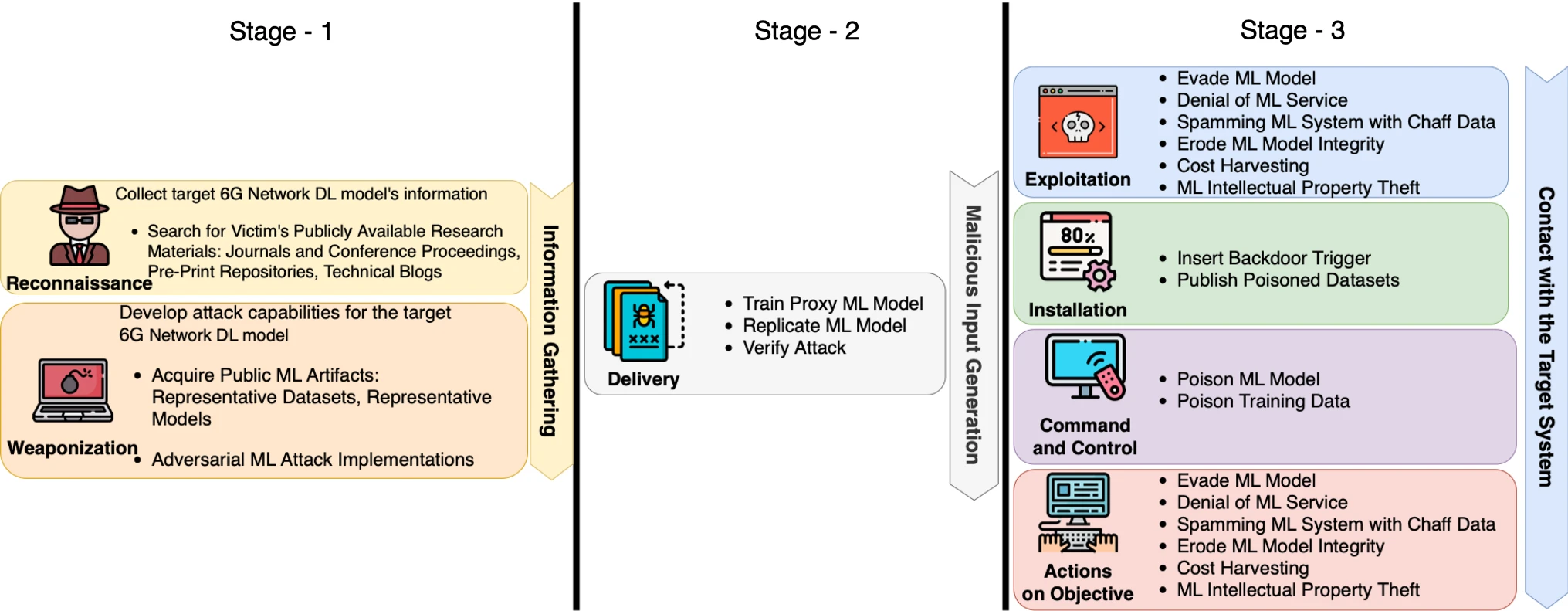

The paper discusses the advantages of using AI algorithms in NextG networks and the importance of a secure AI-powered structure to protect against cyber-attacks. It proposes a Streamlit-based AI trust platform for NextG networks that allows researchers to evaluate, defend, certify, and verify their AI models and applications against adversarial threats of evasion, poisoning, extraction, and interference.

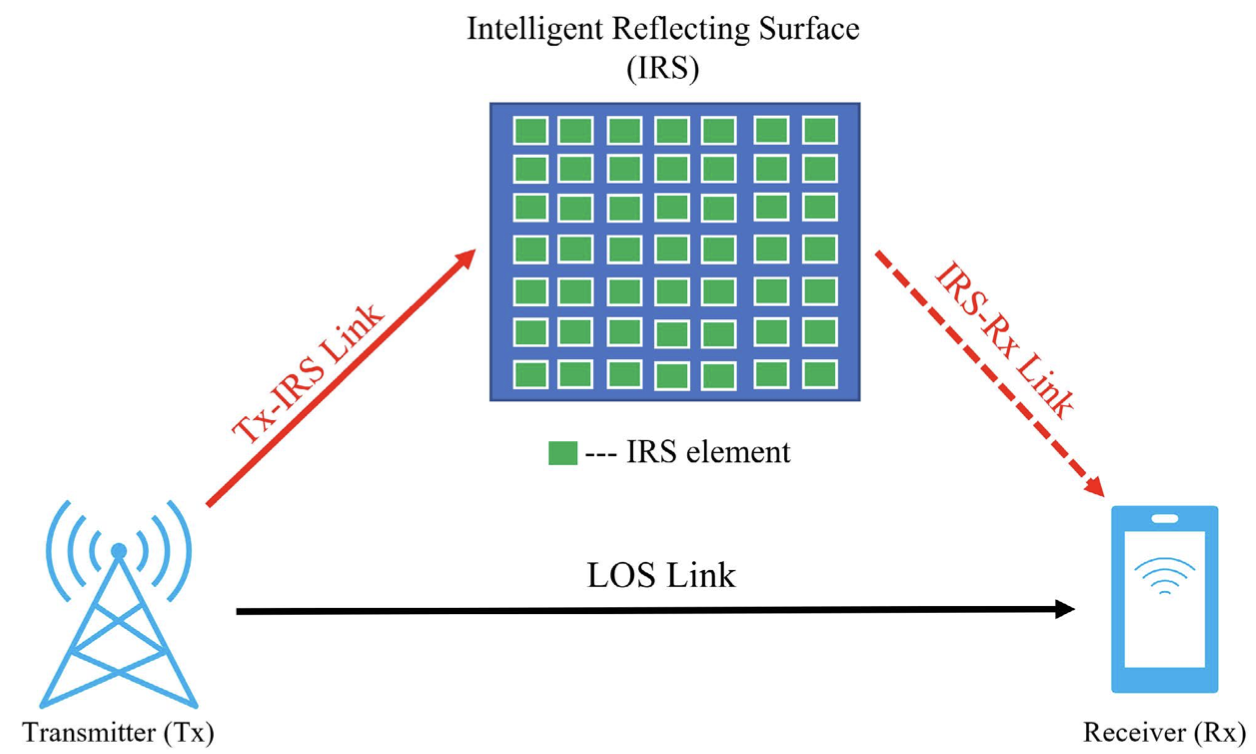

4. Security Hardening of Intelligent Reflecting Surfaces Against Adversarial Machine Learning Attacks (IEEE Access 2022)

This paper focuses on the security threats and mitigation for Artificial Intelligence (AI) powered applications in Next-Generation (NextG) networks. The paper specifically looks at the implementation of AI-powered Intelligent Reflecting Surfaces (IRS) in NextG networks and the vulnerability of these systems to adversarial machine learning attacks. The paper proposes the defensive distillation mitigation method as a way to reduce the vulnerability of AI-powered IRS models. The study’s results indicate that the defensive distillation mitigation method can significantly improve the robustness of AI-powered models and their performance under an adversarial attack.
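
As a rough illustration of defensive distillation in the classification setting where it is most often described: a teacher model is trained with a softmax temperature T, the training data is relabelled with the teacher's softened outputs, and a student model is trained on those soft labels at the same temperature. The sketch below shows only the label softening and the student's loss. The logits, the temperature value, and all function names are hypothetical, and the IRS models in the paper may use a different, regression-oriented variant.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(target_probs, predicted_probs):
    """Cross-entropy between a soft target distribution and a prediction."""
    return -sum(t * math.log(p)
                for t, p in zip(target_probs, predicted_probs) if t > 0)

# Hypothetical teacher logits for one training sample (assumed values).
teacher_logits = [4.0, 1.0, 0.5]
T = 20.0  # distillation temperature (assumed value)

hard_probs = softmax(teacher_logits, 1.0)  # near one-hot at T = 1
soft_labels = softmax(teacher_logits, T)   # softened labels for the student

# Hypothetical student logits; the student is trained at the same temperature T.
student_logits = [2.0, 1.5, 1.0]
student_loss = cross_entropy(soft_labels, softmax(student_logits, T))

print("teacher probabilities at T=1:", [round(p, 3) for p in hard_probs])
print("soft labels at T=20:        ", [round(p, 3) for p in soft_labels])
print("student loss on soft labels:", round(student_loss, 4))
```

At deployment the student would be evaluated at temperature 1. The intent of the softened labels is to flatten the model's sensitivity to small input changes, which is what gradient-based attacks exploit.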

5. Defensive Distillation-Based Adversarial Attack Mitigation Method for Channel Estimation Using Deep Learning Models in Next-Generation Wireless Networks (IEEE Access 2022)

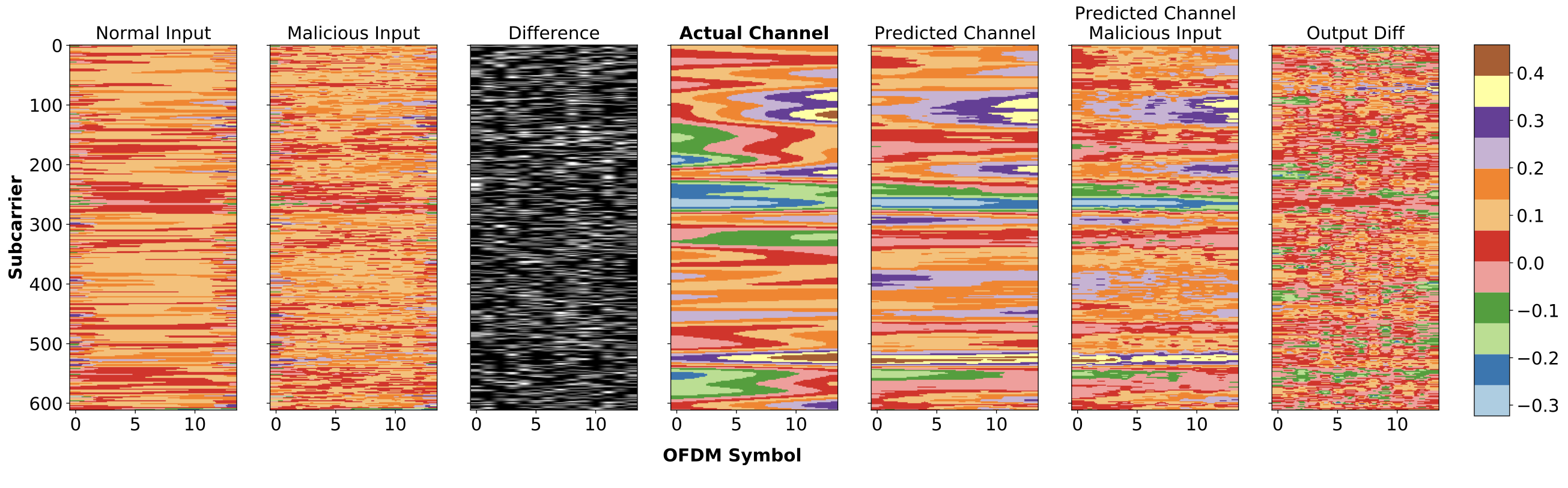

This paper discusses the potential of artificial intelligence in future wireless networks, known as NextG, and the associated concerns about security and adversarial attacks. It presents a comprehensive vulnerability analysis of deep learning channel estimation models and proposes defensive distillation-based mitigation methods to protect against these attacks. The results indicate that the proposed mitigation method can defend against adversarial attacks.

- GitHub: https://github.com/ocatak/6g-channel-estimation-dataset

- IEEEXplore: https://ieeexplore.ieee.org/document/9888103

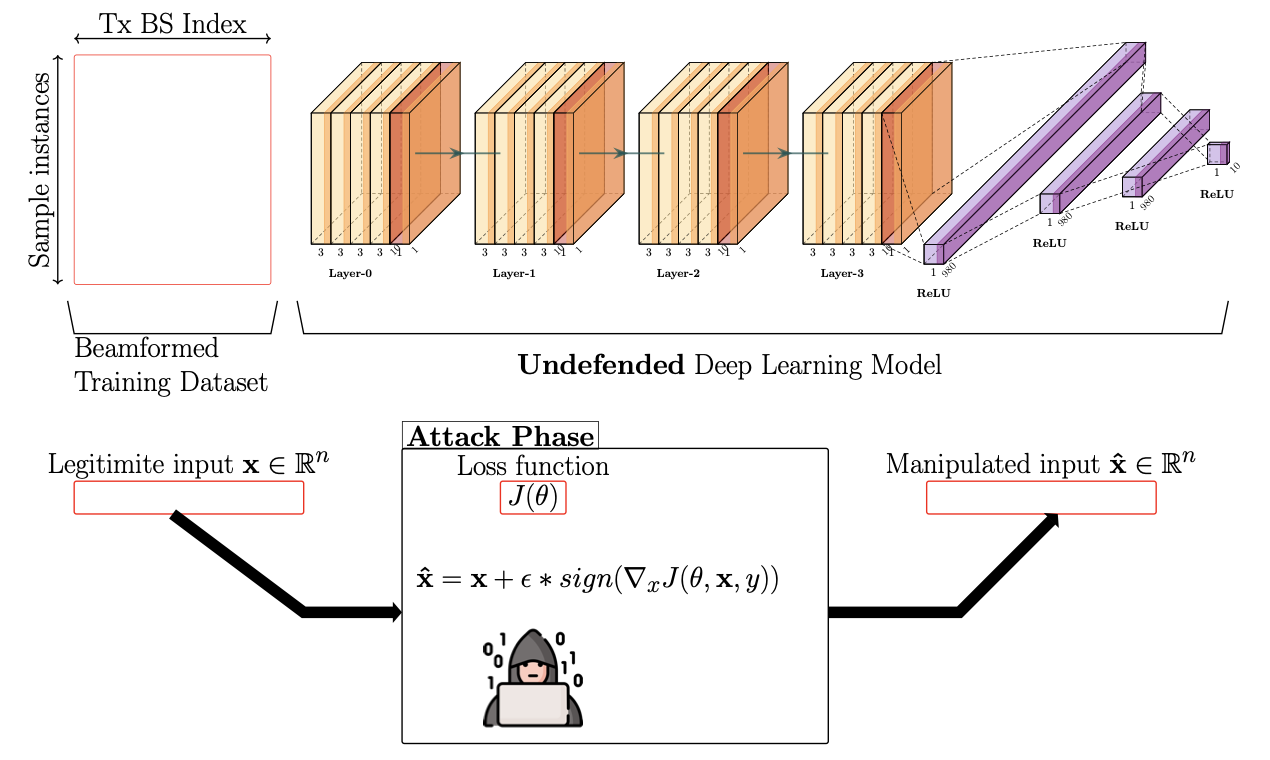

6. Security concerns on machine learning solutions for 6G networks in mmWave beam prediction (Physical Communication 2022)

The paper studies the application of machine learning algorithms to 6G technology and the security concerns that arise from their use. It proposes a mitigation method, based on adversarial training, for adversarial attacks against 6G ML models for millimeter-wave (mmWave) beam prediction. The main idea behind adversarial attacks on ML models is to produce faulty results by manipulating trained DL models, here those used for mmWave beam prediction in 6G applications. The paper reports the performance of the proposed adversarial learning mitigation method under a fast gradient sign method (FGSM) attack. The results show that the mean square error (i.e., the prediction accuracy) of the defended model under attack is very close to that of the undefended model without attack.

- Elsevier - Physical Communication: https://www.sciencedirect.com/science/article/pii/S1874490722000155
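
To make the fast gradient sign method concrete, here is a minimal sketch on a toy linear regressor with a squared-error loss. It is an illustration under stated assumptions, not the paper's beam prediction model: the weights, the input, the target, and the perturbation budget `eps` are all made up for the example. FGSM moves each input feature by `eps` in the direction of the sign of the loss gradient with respect to the input.

```python
def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def predict(w, b, x):
    """Toy linear regressor standing in for a trained model."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def squared_error(w, b, x, y):
    return (predict(w, b, x) - y) ** 2

def input_gradient(w, b, x, y):
    """Gradient of the squared error with respect to the input x."""
    residual = predict(w, b, x) - y
    return [2.0 * residual * wi for wi in w]

def fgsm(w, b, x, y, eps):
    """One-step attack: shift every feature by eps along the gradient sign."""
    grad = input_gradient(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical model parameters and sample (assumed values).
w = [0.5, -1.0, 2.0]
b = 0.1
x = [1.0, 2.0, 3.0]
y = 4.0

x_adv = fgsm(w, b, x, y, eps=0.1)
clean_loss = squared_error(w, b, x, y)
adv_loss = squared_error(w, b, x_adv, y)

print("clean input:      ", x)
print("adversarial input:", [round(v, 3) for v in x_adv])
print(f"squared error: clean={clean_loss:.4f}, under attack={adv_loss:.4f}")
```

Even though no feature moves by more than `eps`, the error grows, because every feature is pushed in the direction that hurts the prediction most.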

7. The Adversarial Security Mitigations of mmWave Beamforming Prediction Models using Defensive Distillation and Adversarial Retraining (Under Review, 2022)

This paper examines the security vulnerabilities of deep neural networks (DNNs) used for beamforming prediction in 6G wireless networks, where beamforming prediction is treated as a multi-output regression problem. The initial DNN model is shown to be vulnerable to adversarial attacks, such as the Fast Gradient Sign Method (FGSM), Basic Iterative Method (BIM), Projected Gradient Descent (PGD), and Momentum Iterative Method (MIM), because it is sensitive to the perturbations in adversarial samples. The study also offers two mitigation methods, adversarial training and defensive distillation, for adversarial attacks against artificial intelligence (AI)-based models used in millimeter-wave (mmWave) beamforming prediction. Furthermore, the proposed scheme can be used when the data are corrupted by adversarial examples in the training data. Experimental results show that the proposed methods effectively defend the DNN models against adversarial attacks in next-generation wireless networks.
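
The iterative attacks named above repeat a small signed-gradient step and keep the result inside an `eps`-ball around the original input. The following sketch shows that loop in its basic form (BIM, which matches PGD with an L-infinity bound and no random start) on a toy two-output linear regressor. The weights, the sample, and the values of `eps`, `alpha`, and `steps` are assumptions chosen for illustration and are not taken from the paper.

```python
def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def predict(W, b, x):
    """Toy multi-output linear regressor: one row of W per output."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bk
            for row, bk in zip(W, b)]

def mse(W, b, x, y):
    preds = predict(W, b, x)
    return sum((p - t) ** 2 for p, t in zip(preds, y)) / len(y)

def input_gradient(W, b, x, y):
    """Gradient of the MSE with respect to the input x."""
    residuals = [p - t for p, t in zip(predict(W, b, x), y)]
    return [sum(2.0 * r * row[i] for r, row in zip(residuals, W)) / len(y)
            for i in range(len(x))]

def iterative_attack(W, b, x, y, eps, alpha, steps):
    """Repeated signed-gradient steps, clipped to the eps-ball around x."""
    x_adv = list(x)
    for _ in range(steps):
        grad = input_gradient(W, b, x_adv, y)
        x_adv = [min(max(xa + alpha * sign(g), x0 - eps), x0 + eps)
                 for xa, g, x0 in zip(x_adv, grad, x)]
    return x_adv

# Hypothetical model parameters and sample (assumed values).
W = [[0.5, -1.0, 2.0], [1.0, 0.3, -0.7]]
b = [0.1, -0.2]
x = [1.0, 2.0, 3.0]
y = [4.0, 0.0]

x_adv = iterative_attack(W, b, x, y, eps=0.1, alpha=0.025, steps=10)
clean_loss = mse(W, b, x, y)
adv_loss = mse(W, b, x_adv, y)

print("adversarial input:", [round(v, 3) for v in x_adv])
print(f"MSE: clean={clean_loss:.4f}, under attack={adv_loss:.4f}")
```

MIM follows the same loop but accumulates a momentum term over the gradients before taking the sign. It is omitted here to keep the sketch short.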

8. Adversarial Machine Learning Security Problems for 6G: mmWave Beam Prediction Use-Case (IEEE BlackSea Conf, 2021)

This paper proposes a mitigation method, based on adversarial learning, for adversarial attacks against 6G machine learning models for millimeter-wave (mmWave) beam prediction. 6G is the next generation of communication systems and will use predictive algorithms. The main idea behind adversarial attacks on machine learning models is to produce faulty results by manipulating trained deep learning models, here those used for mmWave beam prediction in 6G applications. The mean square error of the defended model under attack is very close to that of the undefended model without attack. With the rapid development of deep learning techniques, it is critical to consider security concerns when applying these algorithms.
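
Adversarial training, in its simplest form, augments each training step with an adversarial example crafted against the current model. The sketch below applies this idea to a toy linear regressor trained by stochastic gradient descent, using a one-step signed-gradient attack. The synthetic data, the learning rate, the number of epochs, and `eps` are assumptions for illustration only and do not reproduce the paper's model or results.

```python
import random

def sign(v):
    return (v > 0) - (v < 0)

def predict(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm(w, b, x, y, eps):
    """One-step signed-gradient attack on the squared error."""
    r = predict(w, b, x) - y
    return [xi + eps * sign(2.0 * r * wi) for xi, wi in zip(x, w)]

def sgd_step(w, b, x, y, lr):
    """One gradient step on the squared error for a single sample."""
    r = predict(w, b, x) - y
    return [wi - lr * 2.0 * r * xi for wi, xi in zip(w, x)], b - lr * 2.0 * r

def train(data, eps, lr=0.05, epochs=30, adversarial=False):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            w, b = sgd_step(w, b, x, y, lr)
            if adversarial:
                # Craft an example against the current model, then train on it.
                x_adv = fgsm(w, b, x, y, eps)
                w, b = sgd_step(w, b, x_adv, y, lr)
    return w, b

def mean_squared_error(w, b, data, eps=0.0):
    """MSE on clean inputs (eps = 0) or on FGSM-perturbed inputs (eps > 0)."""
    total = 0.0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        total += (predict(w, b, x_eval) - y) ** 2
    return total / len(data)

# Synthetic regression data (assumed; stands in for a real dataset).
rng = random.Random(0)
true_w = [1.0, -2.0, 0.5]
data = []
for _ in range(200):
    x = [rng.uniform(-1.0, 1.0) for _ in true_w]
    y = sum(wi * xi for wi, xi in zip(true_w, x)) + rng.gauss(0.0, 0.05)
    data.append((x, y))

eps = 0.1
models = {
    "undefended": train(data, eps, adversarial=False),
    "defended": train(data, eps, adversarial=True),
}
for name, (w, b) in models.items():
    clean = mean_squared_error(w, b, data)
    attacked = mean_squared_error(w, b, data, eps)
    print(f"{name}: clean MSE={clean:.4f}, MSE under attack={attacked:.4f}")
```

Comparing the two printed rows gives a small-scale version of the comparison described above: the error of each model with and without the attack.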